Because of the wide variety of intervention designs identified in our review, we have rich data to share about study features such as outcome measures, intervention types, characteristics of interventions, and characteristics of the misinformation presented in the studies (such as modalities of delivery and misinformation topics). A summary of findings is available in the Health Affairs paper. As we build out this page, here are five key findings that stand out to us:

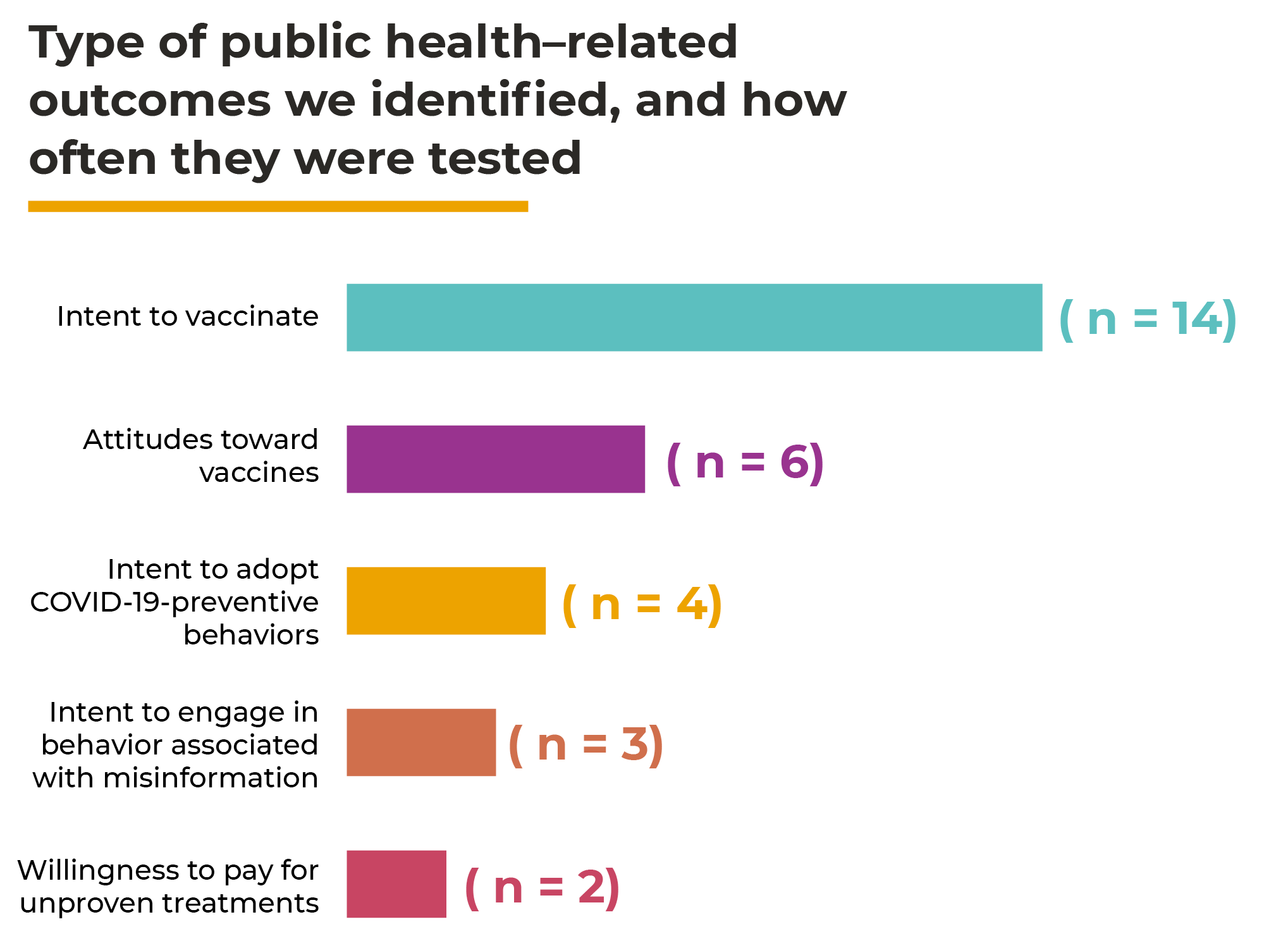

Only 18% of interventions were tested using public health-related outcome measures, such as attitudes toward vaccines, intent to vaccinate or willingness to pay for unproven treatments.

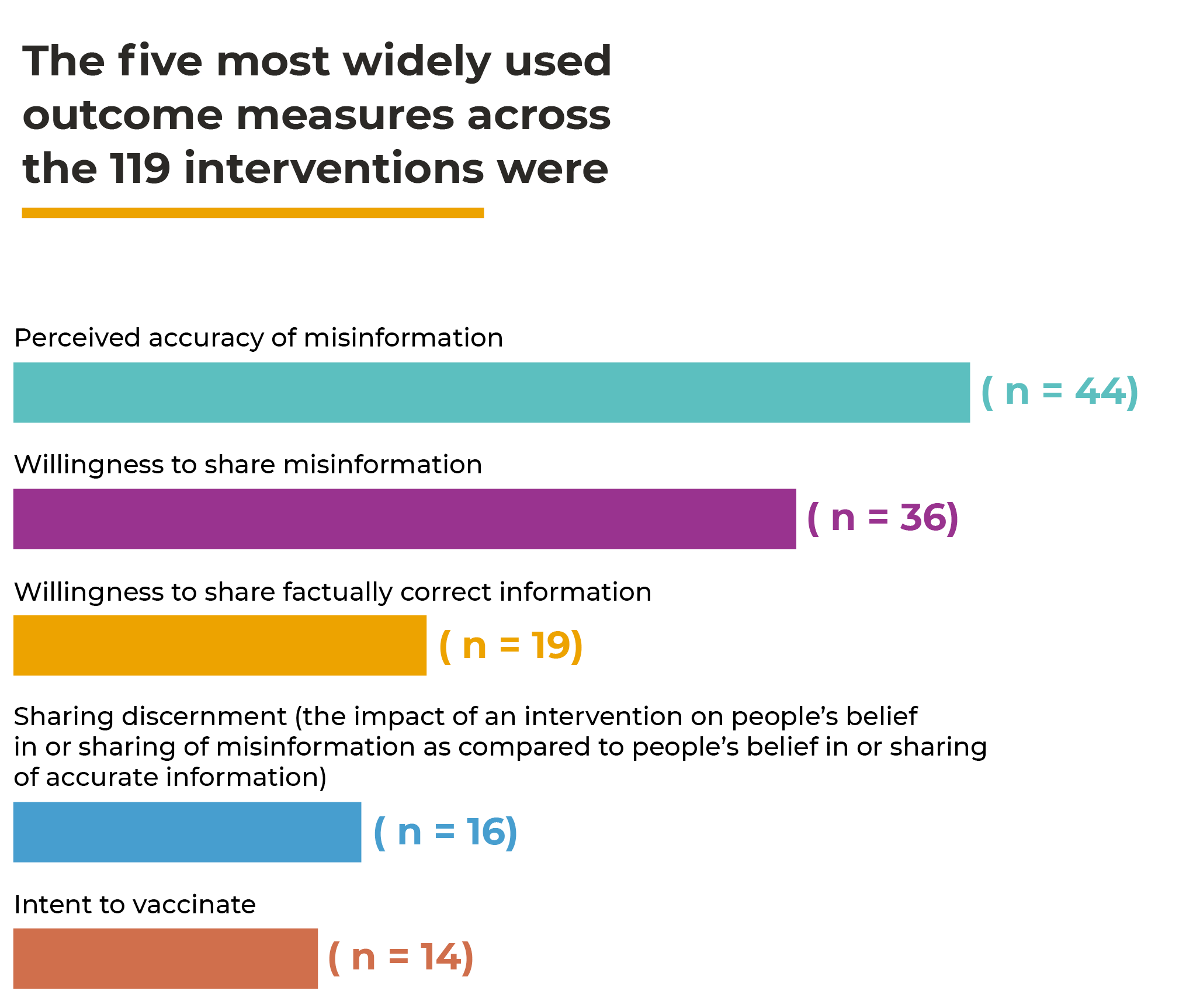

Overall, we identified 47 unique outcome measures tested across the 119 interventions covered in the 50 studies we reviewed.

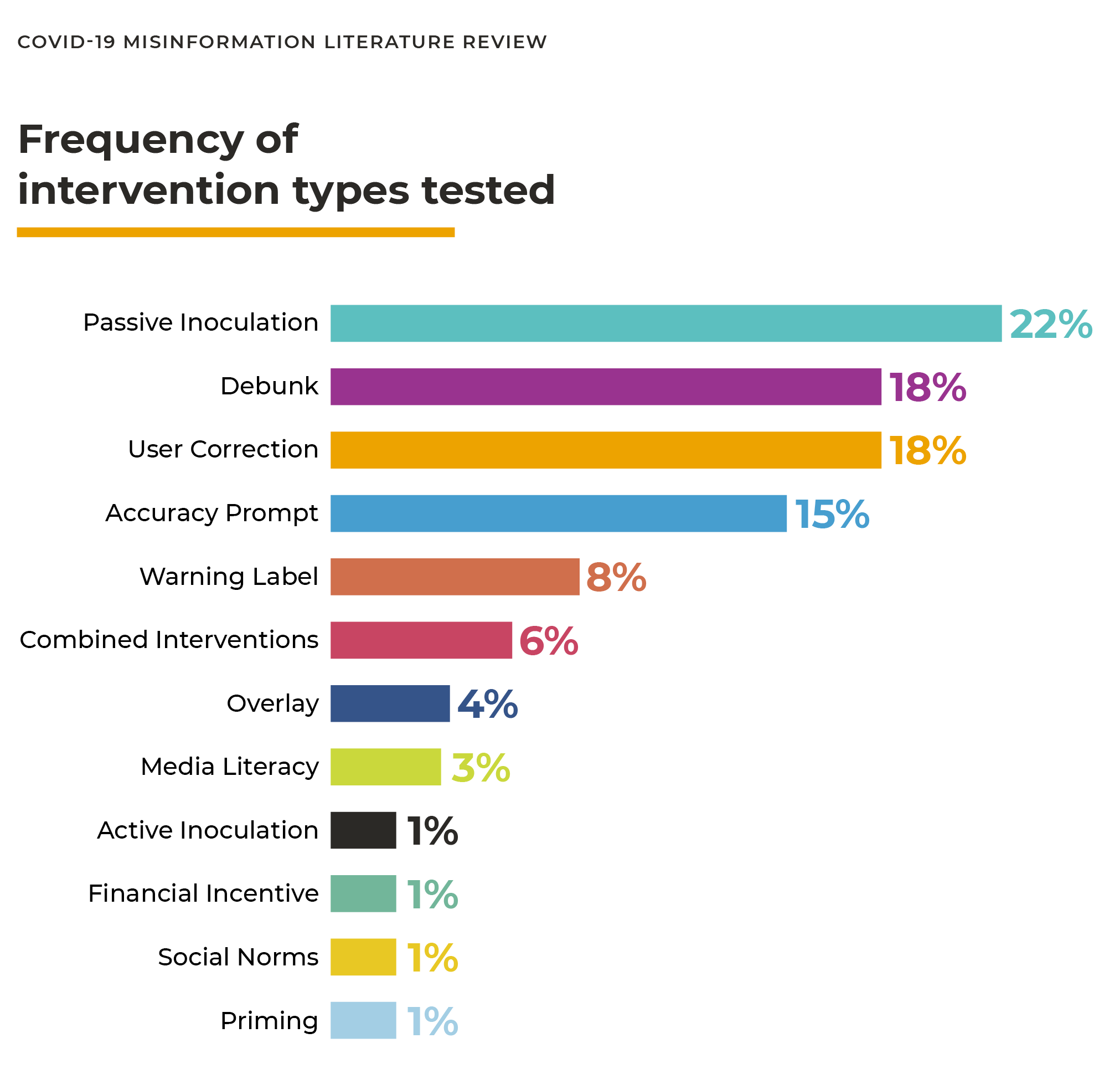

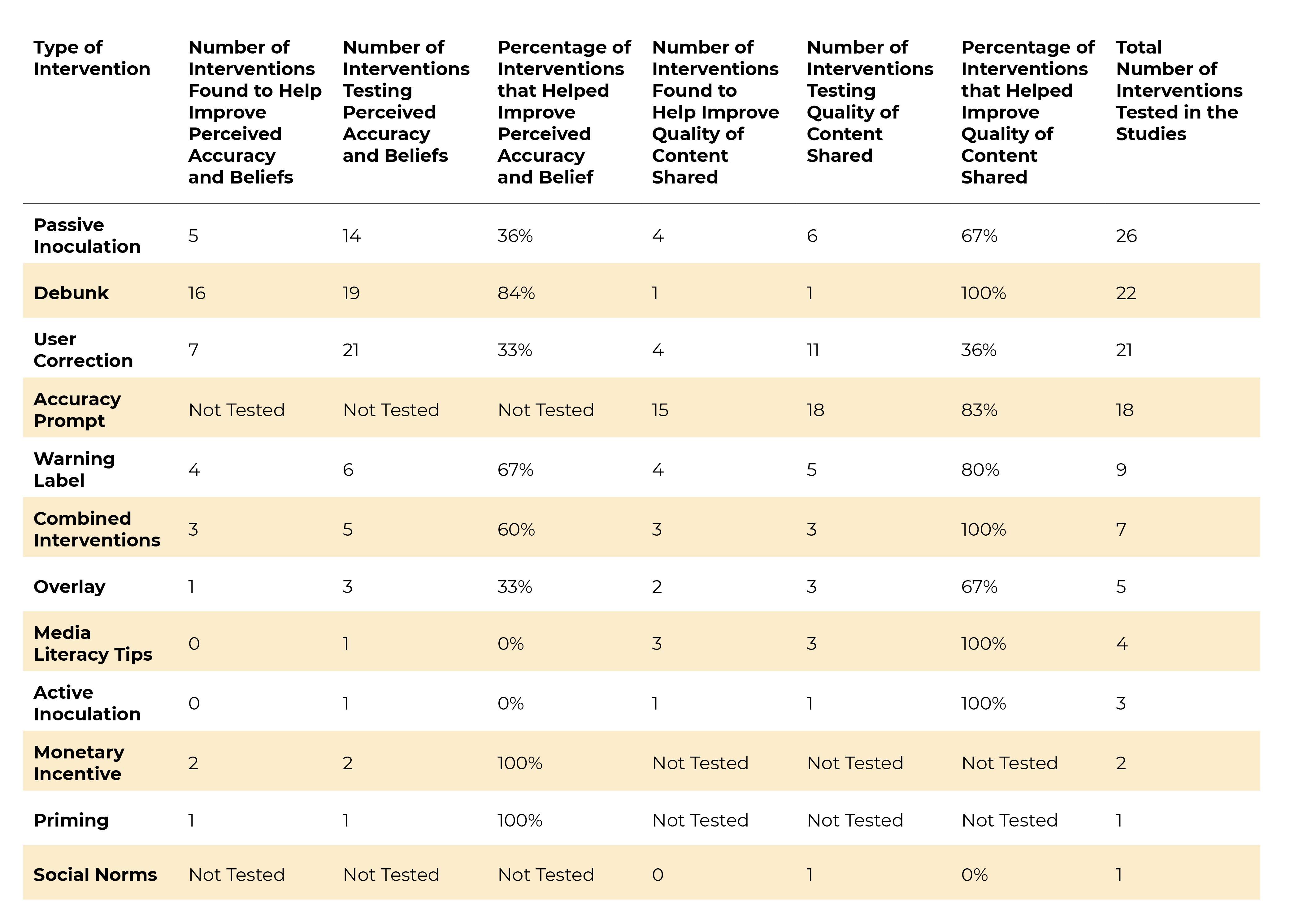

Passive inoculation (21%) and debunks (18%) were the most frequently tested interventions.

We classified the 119 interventions into twelve types and calculated how often each type was tested. The number listed with each type is the count of interventions of that type.

Passive Inoculation (26)

Inoculation interventions teach people about misinformation before they are exposed to it. That is, the inoculation approach tries to alert people to the threat of misinformation, or to the tactics used to spread it, before they encounter it; in theory, this helps them cope with the threat more effectively when they are exposed. The inoculation can address the specific misinformation in question or the general ways misinformation is spread. Because these interventions are preemptive, they differ from interventions such as debunks and user corrections, which are issued after potential exposure to misinformation. Unlike active inoculation interventions (below), passive inoculations are not interactive. For example, a passive inoculation intervention may be an infographic that teaches users about the techniques through which misinformation is spread.

Debunk (22)

Debunks are interventions, shown after a person has been exposed to misinformation, that expose the falseness of a certain piece of content or (de)emphasize its importance. The debunking interventions we reviewed were primarily long texts explaining in detail why specific claims were false, similar to how many fact-checking agencies present their findings. They were also top-down, coming from recognized institutions such as public health or media/journalistic organizations, in contrast to user corrections (described below).

User Correction (21)

User corrections are efforts by social media users to expose the falseness of a certain piece of content or (de)emphasize its importance. In contrast to debunking interventions, which appeared as long texts akin to what you see on a fact-checking website, the user corrections we reviewed occurred primarily as short comments or replies to other users in simulated social media feeds or comment sections. Furthermore, user corrections were primarily peer-to-peer (horizontal) rather than top-down (vertical), the approach employed by the debunks in this review.

Accuracy Prompt (18)

An accuracy prompt asks participants to rate the accuracy of a piece of content before they are exposed to misinformation. The content of the accuracy prompt is typically unrelated to the misinformation itself.

Warning Labels (9)

Warning labels are usually short blurbs of text attached to a social media post, warning readers that the content they are consuming may contain misinformation.

Combined Interventions (7)

Combined interventions bundle several different interventions into a single intervention, such as media literacy tips delivered alongside an accuracy prompt.

Overlay (5)

An overlay is a visual filter or gauze that covers a piece of content that may contain misinformation. Overlays act as friction, requiring people to click a button before viewing the content. They often include warnings and sometimes tips. They differ from warning labels in that they appear on top of the post, making it difficult to read the content before seeing the warning and clicking through.

Media Literacy Tips (4)

Media literacy tips teach audiences ways to engage responsibly with content and check it for accuracy or credibility. For example, a tip might teach people how to verify content by comparing claims and checking sources.

Active Inoculation (3)

Inoculation interventions teach people about misinformation before they are exposed to it; that is, they are preemptive. The inoculation can address the specific misinformation in question or the general ways misinformation is spread. However, in contrast to passive inoculation, active inoculation interventions encourage participants to actively formulate responses to what they are learning (e.g., a game in which people have to make decisions and receive feedback on those decisions).

Financial Incentive (2)

Financial incentives discourage the uptake of misinformation by rewarding people monetarily for accurate judgments.

Social Norms (1)

Numerous studies have tested appeals to social norms as misinformation interventions.

One study included in this review tested one social norm intervention, the social reference cue, which attaches a short message to a social media post stating how many people in the participant’s social network had (or had not) interacted with the post.

Priming (1)

Priming, or the priming effect, occurs when an individual’s exposure to a certain stimulus influences their response to a subsequent stimulus.

The study included in this review examined priming by testing whether recalling experiences of benign intergroup contact can prime positive attitudes toward outgroup members and thus decrease the likelihood of conspiracy beliefs targeting those outgroups.

Evidence from this review supports the view that debunking interventions help improve beliefs and accuracy judgments, while accuracy prompts help improve sharing behavior.

- There were 19 debunking interventions measuring beliefs or accuracy judgments. Of those, 16 (84%) improved these measures. Debunks expose the falseness of content after people have encountered it.

- There were 18 accuracy prompt interventions measuring sharing behavior. Of those interventions, 15 (83%) accuracy prompts improved the quality of content people shared. Accuracy prompts, also called accuracy nudges, shift people’s attention to the idea of accuracy as a way of improving the quality of content they share online.

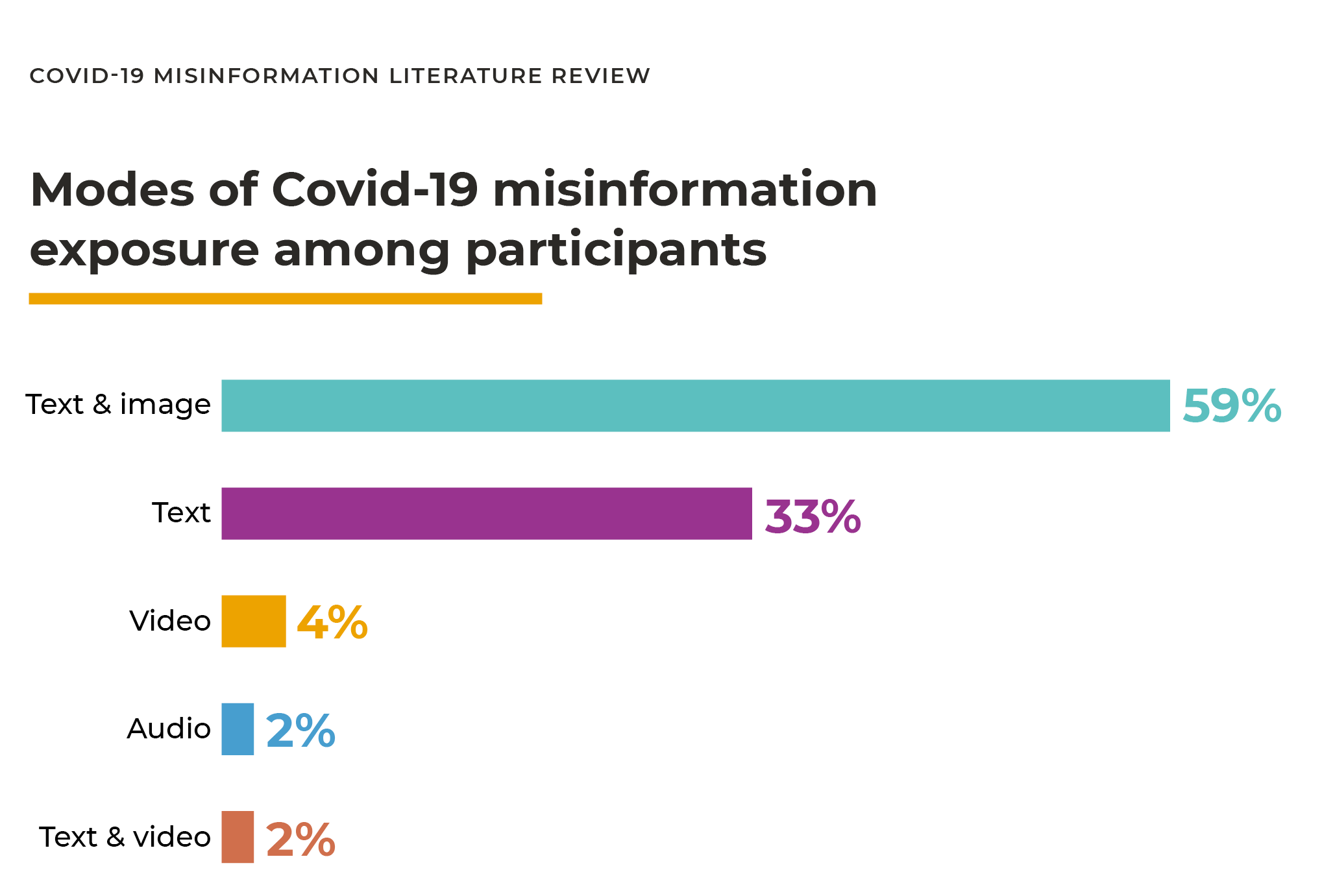

Only 6% of studies exposed participants to any video content (video only or video and text), despite the growing influence of video.

We extracted the modalities in which COVID-19 misinformation was presented to participants in interventions. This shows that research has focused heavily on text and on image/text combinations, pointing to an urgent need for more research on the impact of video-based health misinformation.

Study participants were most frequently exposed to misinformation related to COVID-19 prevention, treatment and diagnostics (52%), followed by vaccine misinformation (42%).

In our review, we extracted the misinformation topics used in interventions – in other words, the content that was used to stimulate a response from participants.

The most frequently used topics of vaccine misinformation specifically were:

- Safety, efficacy and necessity (36%)
- Conspiracy theories related to vaccines (9%)
- Vaccine misinformation related to morality and religion (8%)

Covid-19 Misinformation Topics Used to Code Stimuli

Prevention, Treatment, and Diagnostics

Misinformation about false prevention techniques, treatment methods, and diagnostics for COVID-19. Examples found in the literature review include “Inhaling vinegar can treat Covid-19 symptoms by clearing sputum from airways” and “Hot steam and tea cure coronavirus.”

Vaccines

Misinformation in this category made false claims about Covid-19 vaccines. These misleading vaccine claims were further categorized into separate topics, which are listed under Covid-19 Vaccine Misinformation Topics below.

Public Preparedness and Public Service Warnings

This refers to misinformation containing false claims about public health measures (e.g., social distancing and quarantines), hospitals and medical systems, and public authorities. Examples from our study include “Lockdowns are due to healthy people getting tested” and “The CDC lowered the PCR threshold while no longer recording asymptomatic cases…but only for the vaccinated.”

Conspiracies

Posts containing well-established conspiracy theories. A conspiracy often features a secret, proactive, planned, multi-stage deception that involves harm to a given individual or group of people, often to the benefit of another. One example from our study is “Bill Gates Outlines Plan To Depopulate The Planet.”

Politics, Economics and Public Authority

This misinformation involves inaccurate, misleading or false facts, figures, or statements about key actors in the pandemic (political or public authority figures, governments, countries, institutions, corporations, etc.). Examples include “The Centers for Disease Control and Prevention is reporting all pneumonia and influenza deaths as caused by COVID-19” and “Texas Supreme Court ruled today No Voting By Mail due to Covid.”

Conspiracy theories are often political in nature, and many examples of political misinformation would be considered conspiracy theories. However, many of the political misinformation stimuli we gathered from the reviewed studies were not conspiracy theories: they were not well-established, and they did not involve a secret, proactive, planned, multi-stage deception that harms a given individual or group of people, often to the benefit of another. They were simply exaggerations or misleading claims about a country’s policy, the public health measures encouraged by a public authority, or the economic ramifications of certain policies. We therefore created two topic buckets, “Conspiracies” and “Politics, Economics and Public Authority,” to distinguish between these two kinds of misleading content.

Virus Attributes

This refers to misinformation about the properties of Covid-19 itself, such as how it is transmitted, its possible origins, and its mortality rate. Examples include “The coronavirus has the same mortality rate as the seasonal flu virus” and “New data: COVID-19 less deadly than the flu.”

General Misinformation

General misinformation refers to misinformation that mentioned Covid-19 or the pandemic, but did not address any particular aspect of the crisis. For example, “Segment shows actors were used in Australian Covid-19 awareness campaign.”

Covid-19 Vaccine Misinformation Topics

Development, Access and Provision

Posts related to the ongoing progress and challenges of vaccine development. This also includes posts concerned with the testing (clinical trials) and provision of vaccines as well as public access to them. Example: “COVID-19 vaccine was approved too quickly.”

Safety, Efficacy and Necessity

Posts concerning the safety and efficacy of vaccines, including claims that they may not be safe or effective. Content related to the perceived necessity of vaccines also falls under this topic. For example, “The vaccine causes severe complications.”

Politics, Economics and Public Authority

This misinformation involves inaccurate, misleading or false facts, figures, or claims about key actors involved with vaccines (political or public authority figures, governments, countries, institutions, corporations, etc.). For example, “Biden Orders Dishonorable Discharge for 46% of Troops Who Refuse Vaccine.”

Conspiracy

Posts containing well-established conspiracy theories involving vaccines. For example, “WATCH: Bill Gates admits his Covid-19 vaccine might kill nearly 1m people.”

Liberty and Freedom

Posts pertaining to concerns about how vaccines may affect civil liberties and personal freedom. For example, “The COVID-19 vaccination is mandatory.”

Morality and Religion

Posts containing moral and religious concerns around vaccines, such as their composition and the way they are tested. For example, “Covid-19 vaccines made with aborted fetuses.”